A powerful GPU is essential for running modern games, but how much do you know about its long and fascinating history? The evolution of the Graphics Processing Unit (GPU) is a journey of technological breakthroughs that transformed gaming from simple pixelated screens to the highly immersive and visually stunning experiences we enjoy today.

1970s: The Birth of Gaming Graphics

The origins of GPUs trace back to the early days of arcade gaming. During this era, graphics were produced by simple 2D display controllers, such as video shifters and video address generators. These devices were the predecessors of today’s sleek and powerful GPUs and served a single purpose: driving what appeared on screen.

The 1970s saw significant innovations from companies such as RCA, Motorola, and Atari. In 1976, RCA introduced the “Pixie” video chip, which could produce an NTSC-compatible video signal at a resolution of 62x128, a considerable achievement at the time. Similarly, Motorola’s video address generators laid the groundwork for the Apple II, one of the earliest personal computers. Atari contributed ANTIC, a large-scale integration (LSI) display controller used in the Atari 400 that supported smooth scrolling and playfield graphics.

One of the major milestones was the release of Namco’s Galaxian arcade system in 1979. Its specialized graphics hardware supported RGB color, multi-colored sprites, and tilemap backgrounds. Galaxian became a cornerstone of what is now known as the “golden age of arcade video games,” setting the stage for the future of gaming graphics.

1980s: The Rise of Graphics Processors

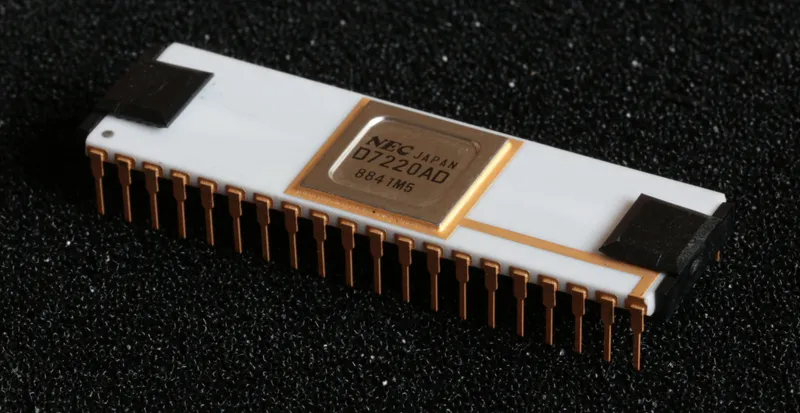

The 1980s were a transformative decade for graphics technology, not just in gaming but also in personal computing. This period saw the introduction of the first fully integrated Very-Large-Scale Integration (VLSI) graphics display processor for PCs, the NEC µPD7220. This processor paved the way for more complex and programmable graphics solutions.

Companies like IBM and Intel began to push the boundaries of what graphics hardware could do. IBM offered monochrome and color display adapters (MDA and CGA) for its PCs, improving the quality of on-screen text and images. Intel followed with the iSBX 275 Video Graphics Controller Multimodule Board, which could display eight colors at 256x256 resolution or monochrome graphics at 512x512, a significant leap in visual fidelity for the time.

Meanwhile, in 1985, three Hong Kong immigrants founded ATI Technologies in Canada, a company that would go on to become a leader in the graphics card market. The decade ended with a bang when Fujitsu released the FM Towns computer in 1989, boasting a palette of 16,777,216 colors and foreshadowing the rich visuals to come in gaming.

1990s: The Dawn of Modern GPUs

The 1990s were a golden era for gaming and the true beginning of modern GPU technology. As demand for real-time 3D graphics grew, companies began mass-producing 3D graphics hardware for the consumer market. Leading the charge was 3dfx, whose Voodoo1 and Voodoo2 graphics cards dominated the market and set new standards for gaming visuals.

However, it was Nvidia that would redefine the landscape. In 1999, Nvidia released the GeForce 256, which the company marketed as the “world’s first GPU.” It moved transform and lighting (T&L) calculations from the CPU onto the graphics chip and supported 32-bit color, delivering class-leading 3D gaming performance. The GeForce 256 marked the beginning of a new era in which GPUs became a critical component for gaming, enhancing both the visual experience and the performance of video games.

2000s: The Era of Programmable Shading and Multi-GPU Setups

The 2000s were marked by rapid advancements in GPU technology. In 2001, Nvidia’s GeForce 3 became the first consumer chip with programmable vertex and pixel shaders, laying the foundation for modern shading techniques. Programmable shaders let developers write their own lighting and surface effects instead of relying on fixed-function hardware, revolutionizing the way games looked and felt.

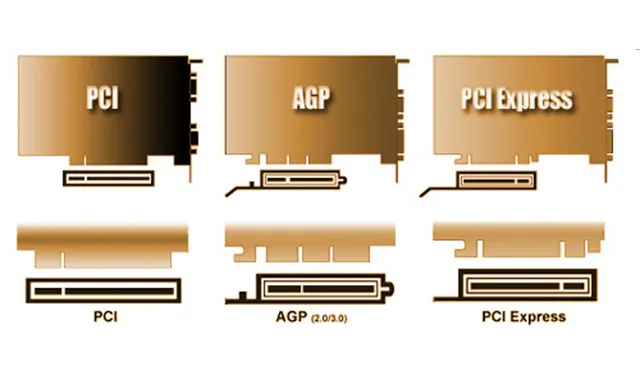

ATI, Nvidia’s main competitor, quickly followed suit, incorporating similar technology into their Radeon series. The decade also saw the transition from the aging AGP interface to the much faster PCI Express (PCIe), which remains the standard today.

Another significant development was the introduction of multi-GPU setups, with Nvidia’s SLI (Scalable Link Interface) and ATI’s CrossFire technology. These innovations allowed two or more graphics cards to share the rendering workload, boosting performance in supported games and enabling even more complex and detailed game environments.

2010s and Beyond: The Age of Ray Tracing and AI

As we moved into the 2010s, GPUs became more powerful and versatile than ever before. Nvidia and AMD continued to push the boundaries with adaptive-sync technologies, Nvidia’s G-Sync and AMD’s FreeSync, which match the monitor’s refresh rate to the GPU’s frame rate to eliminate screen tearing and reduce stutter. AMD’s Eyefinity, meanwhile, enabled multi-display gaming setups.

The most significant breakthroughs, however, were real-time ray tracing and AI-assisted upscaling. Nvidia’s GeForce RTX series, launched in 2018, brought hardware-accelerated real-time ray tracing to consumer graphics cards, fundamentally changing how light, shadows, and reflections are rendered in games. The same cards introduced DLSS (Deep Learning Super Sampling), which uses a neural network to upscale frames rendered at a lower resolution. Nvidia has called real-time ray tracing the “Holy Grail” of graphics, and it brings a level of realism to games that was previously out of reach.

AMD has also made strides: its Radeon Image Sharpening technology restores detail to images rendered at lower resolutions, helping make 4K gaming more accessible. Both companies are now leveraging AI and upscaling to improve gaming performance, ensuring that the future of gaming looks brighter, and sharper, than ever.

Conclusion

The evolution of GPUs is a testament to the relentless pursuit of better graphics and more immersive gaming experiences. From the rudimentary display controllers of the 1970s to the sophisticated, AI-powered GPUs of today, each decade has brought innovations that have reshaped the gaming landscape. As we look to the future, one thing is certain: GPUs will continue to be at the heart of gaming, driving the industry forward with every new technological leap.